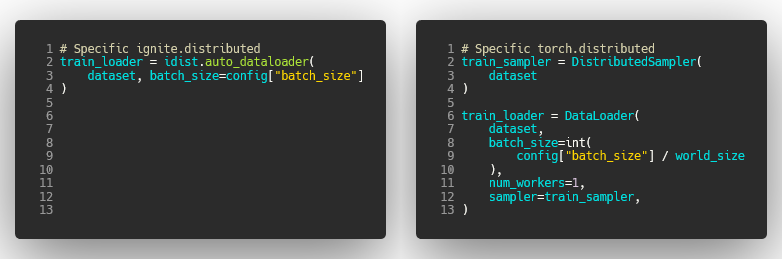

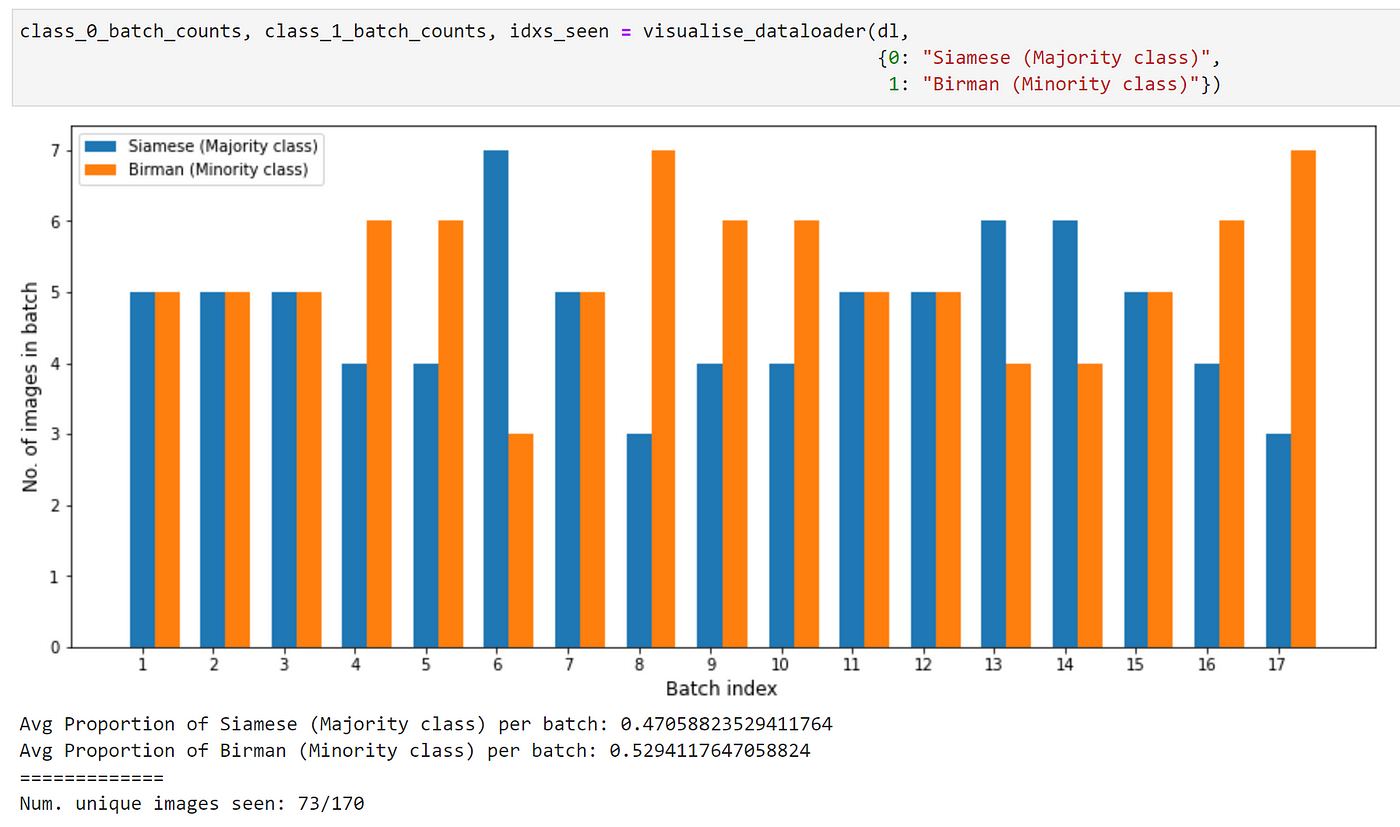

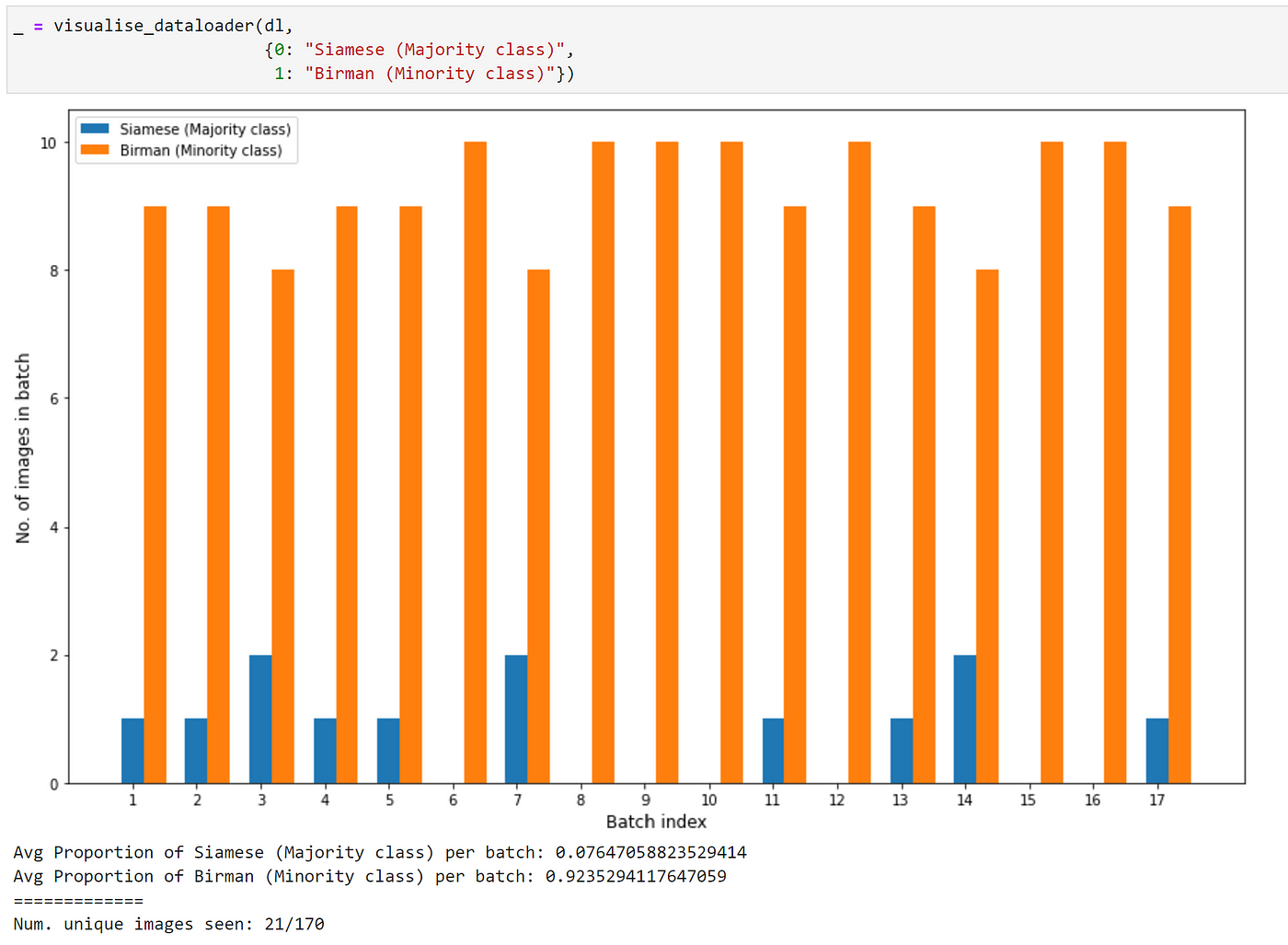

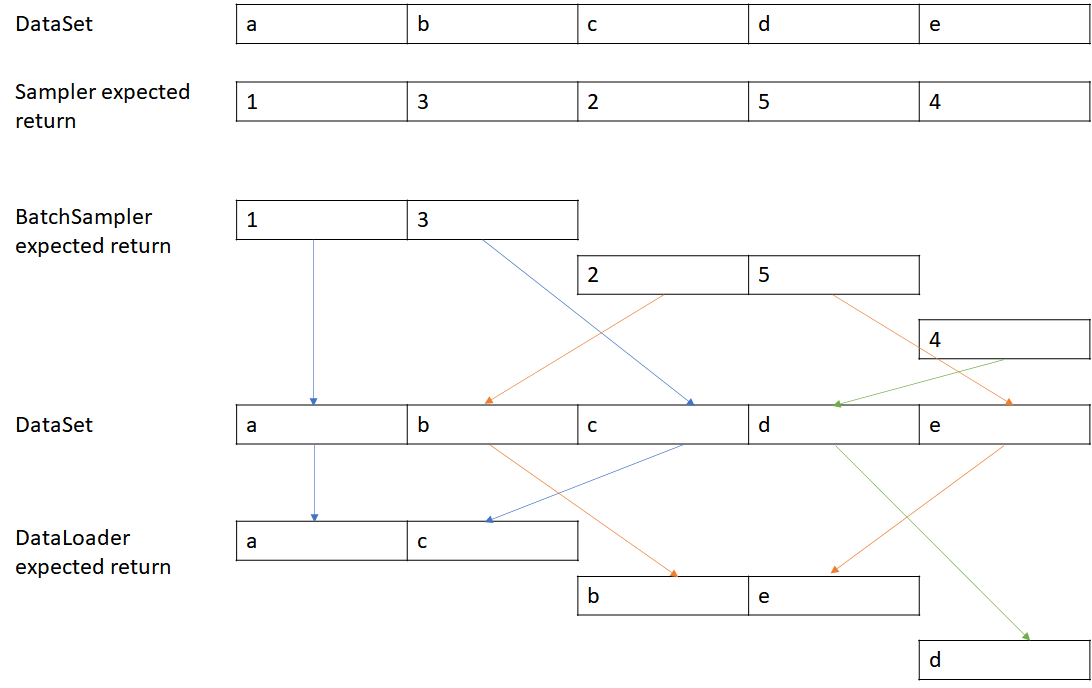

PyTorch Dataset, DataLoader, Sampler and the collate_fn | by Stephen Cow Chau | Geek Culture | Medium

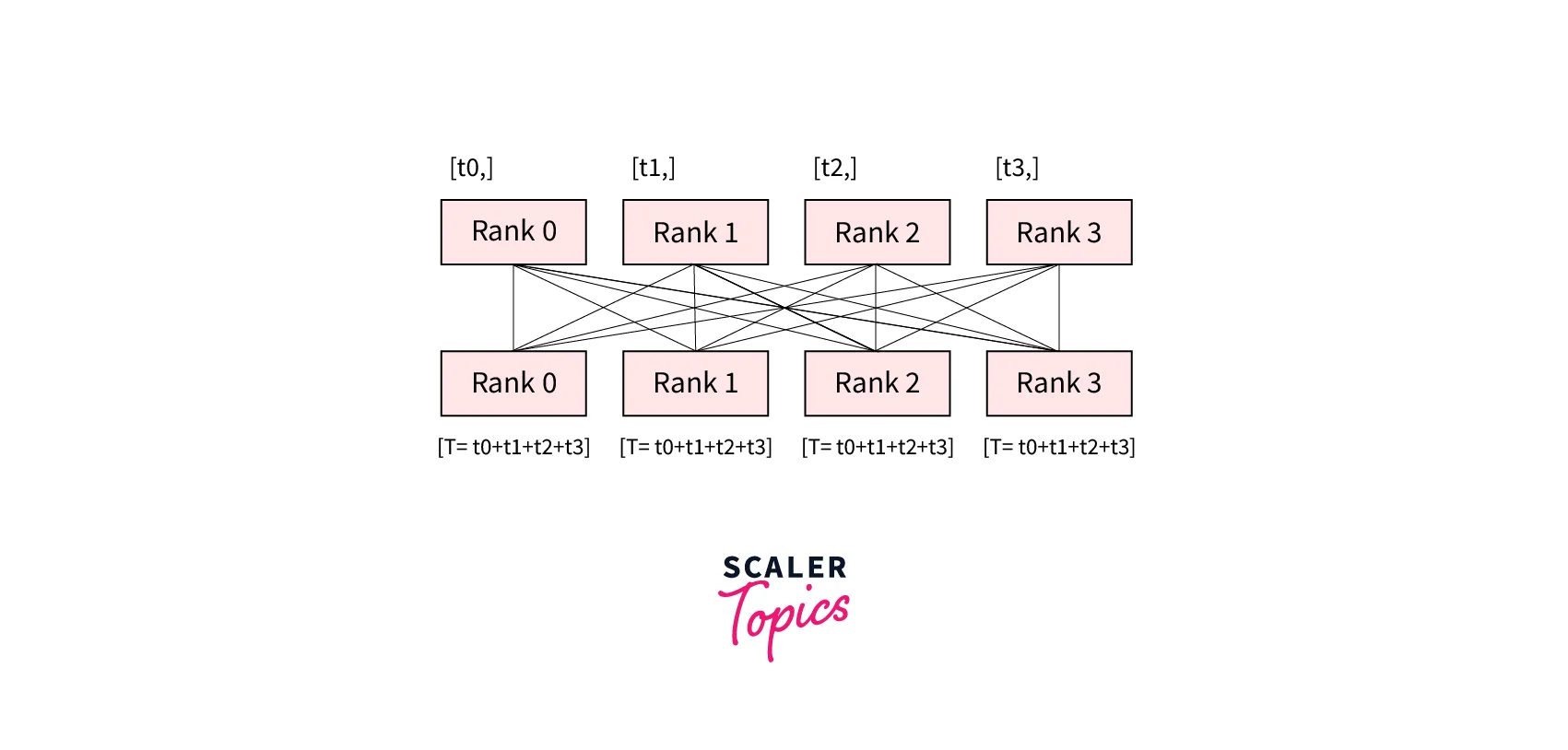

Getting Started with Fully Sharded Data Parallel(FSDP) — PyTorch Tutorials 2.2.0+cu121 documentation

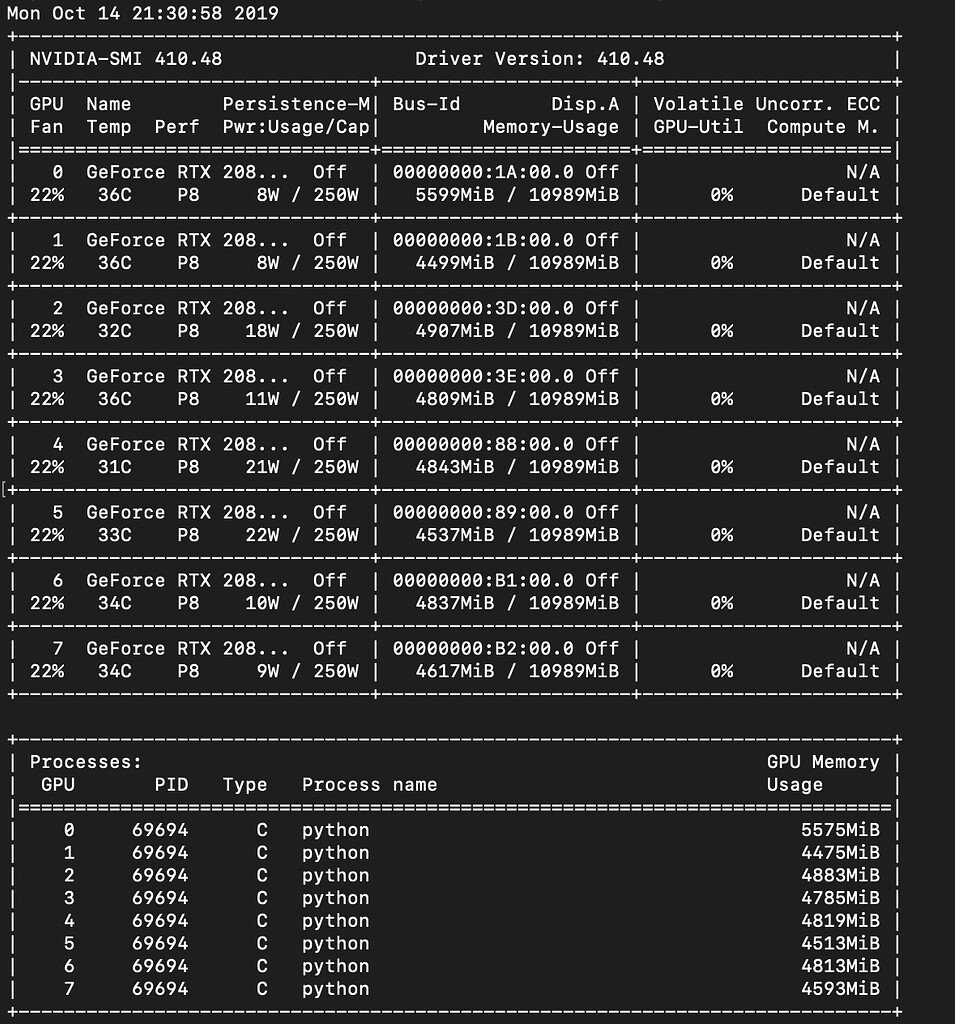

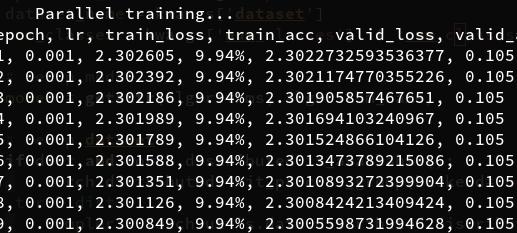

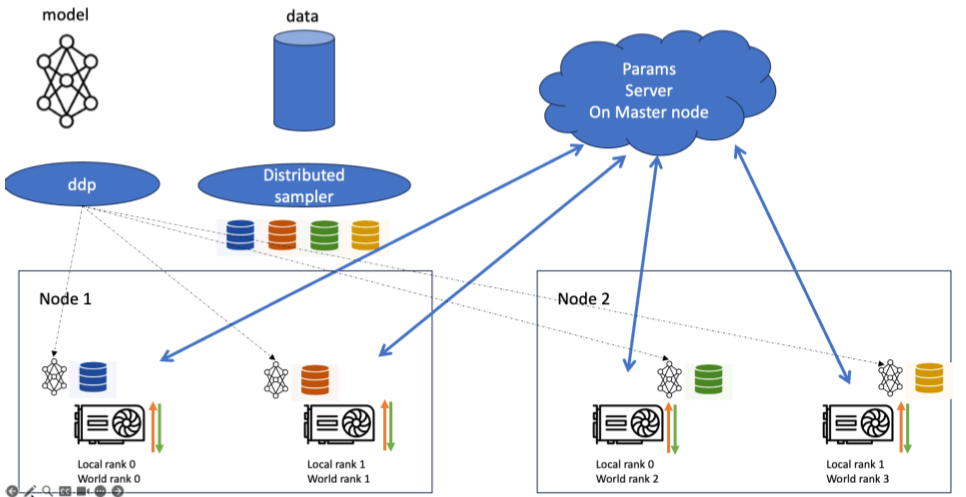

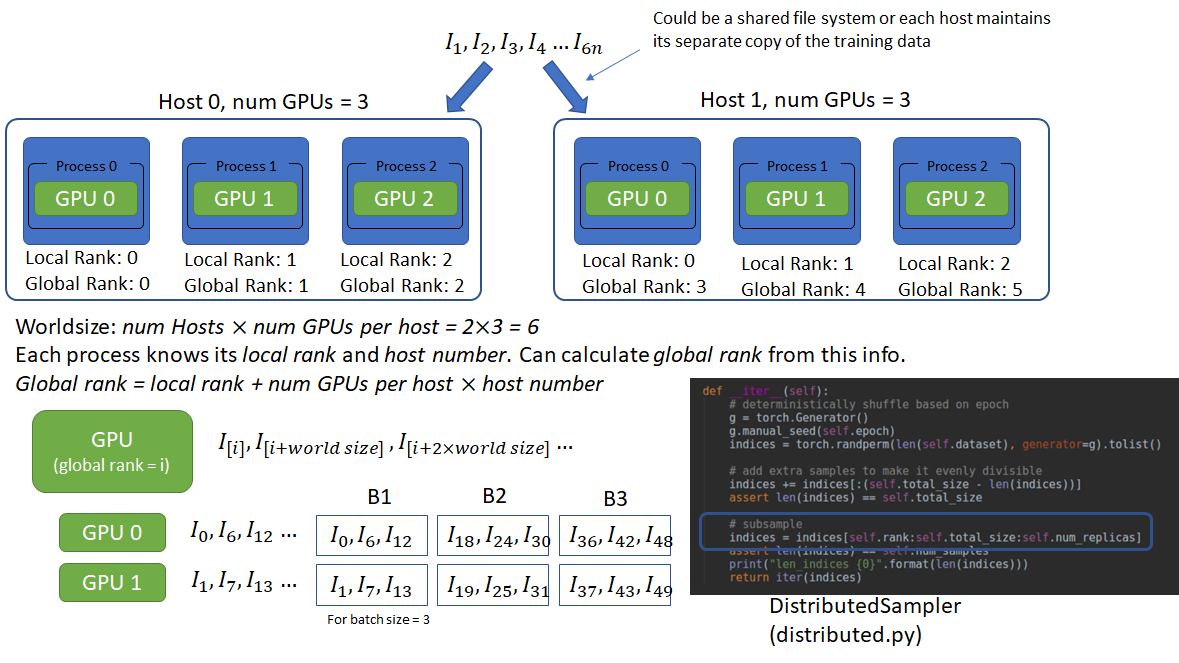

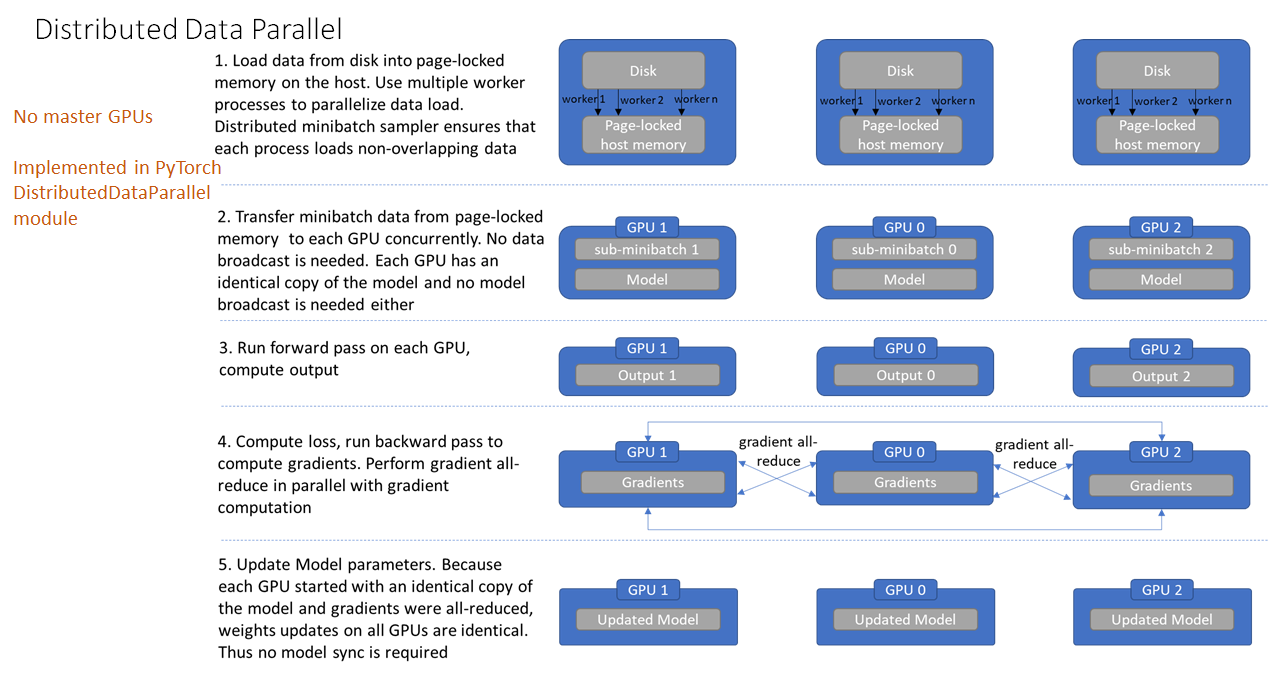

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer