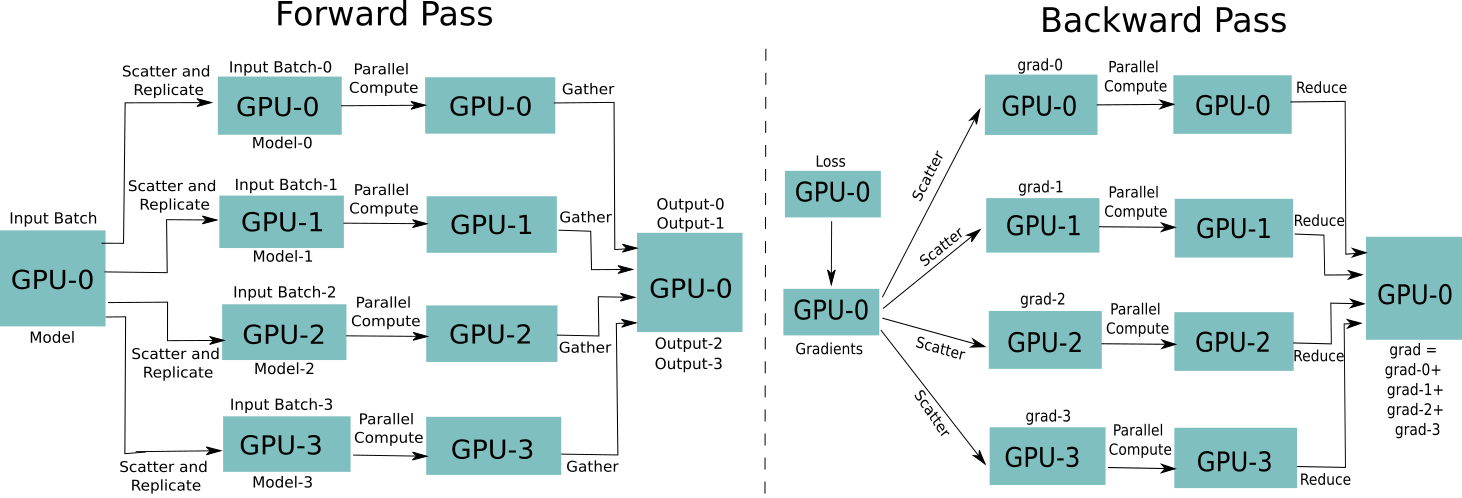

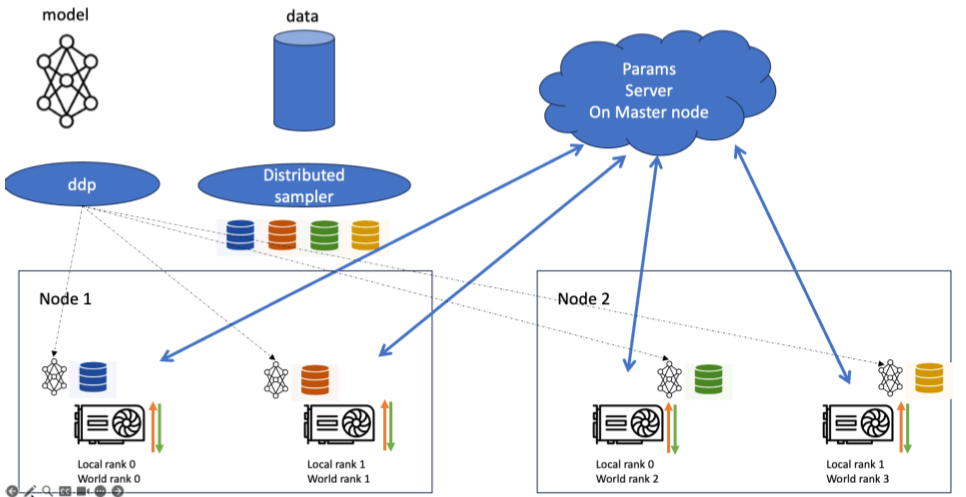

Multi-Node Multi-Card Training Using DistributedDataParallel_ModelArts_Model Development_Distributed Training

Getting Started with Fully Sharded Data Parallel(FSDP) — PyTorch Tutorials 2.2.1+cu121 documentation

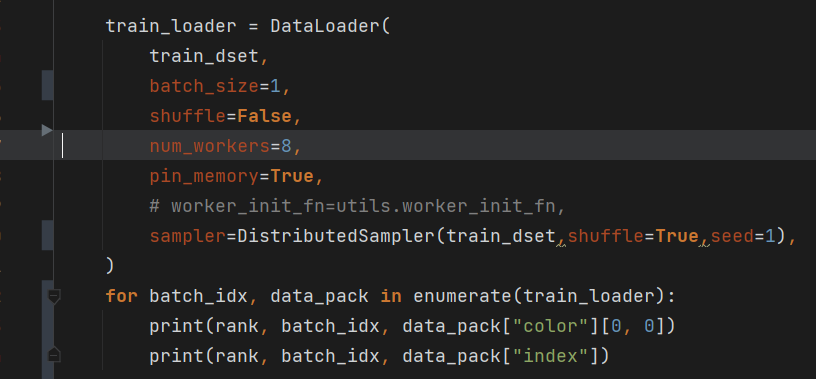

[Feature request] Let DistributedSampler take a Sampler as input · Issue #23430 · pytorch/pytorch · GitHub

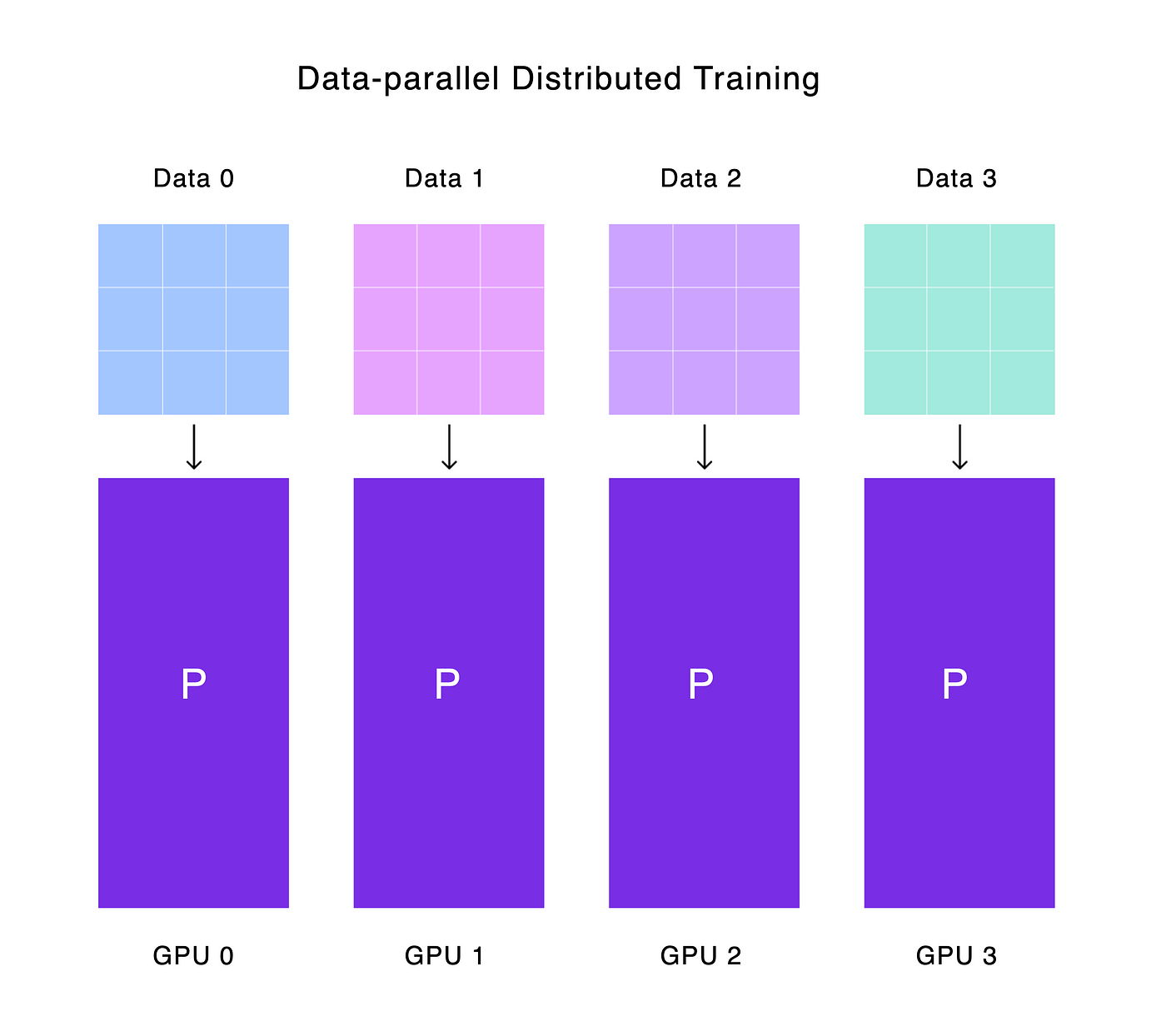

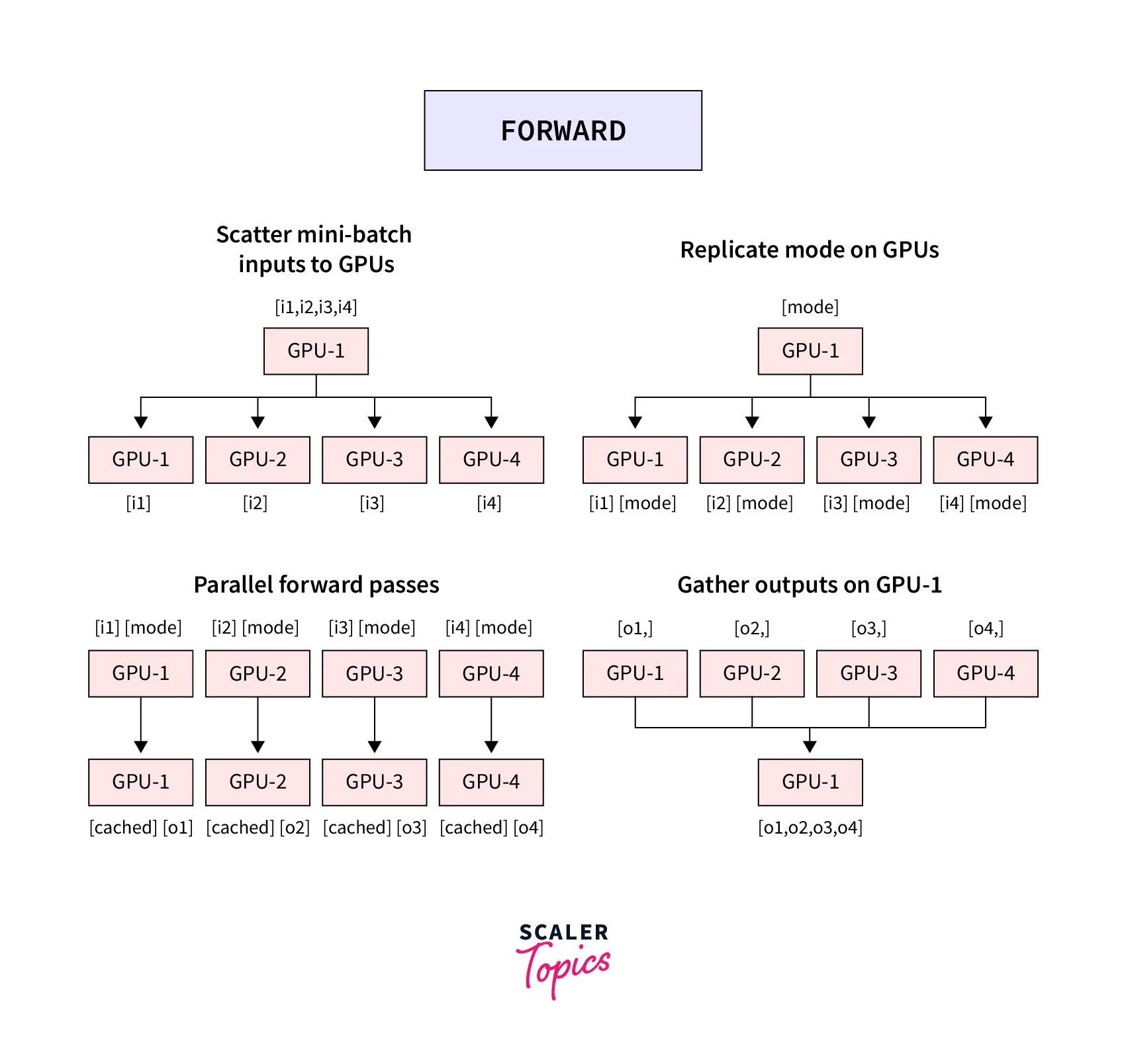

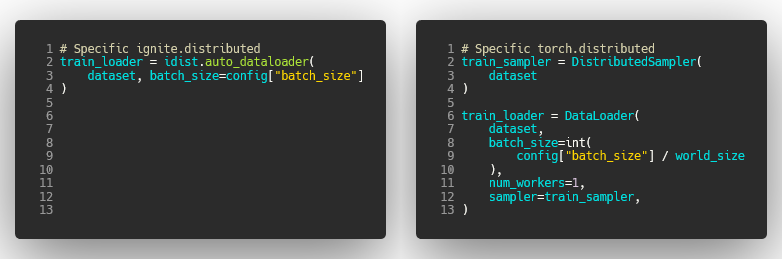

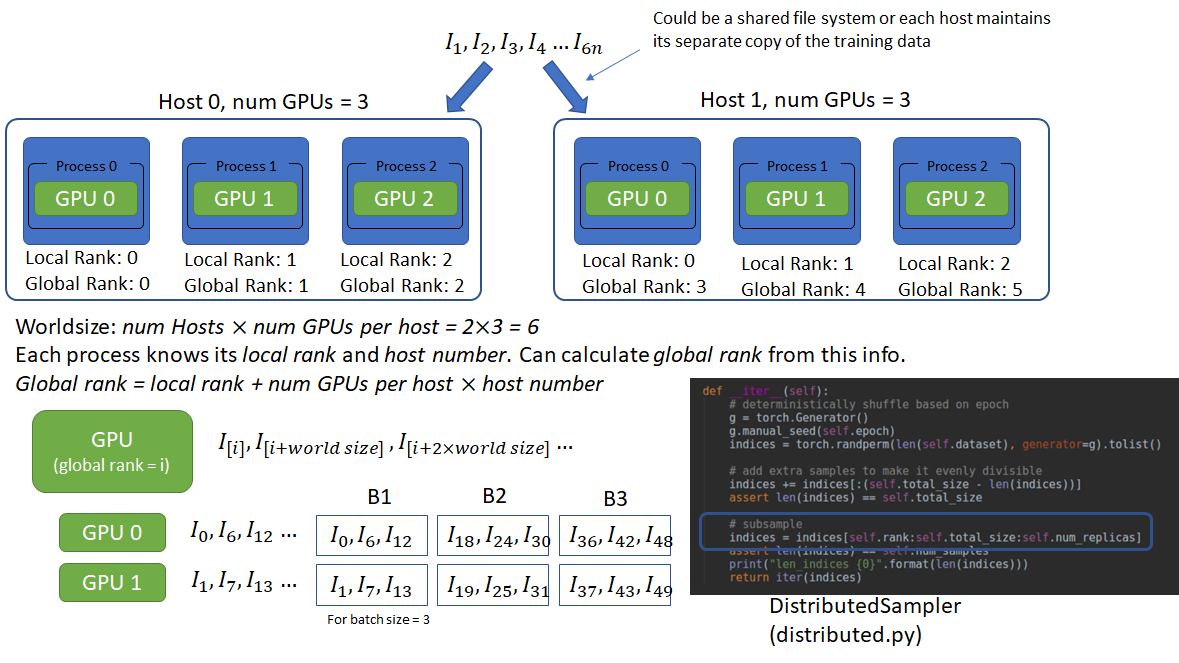

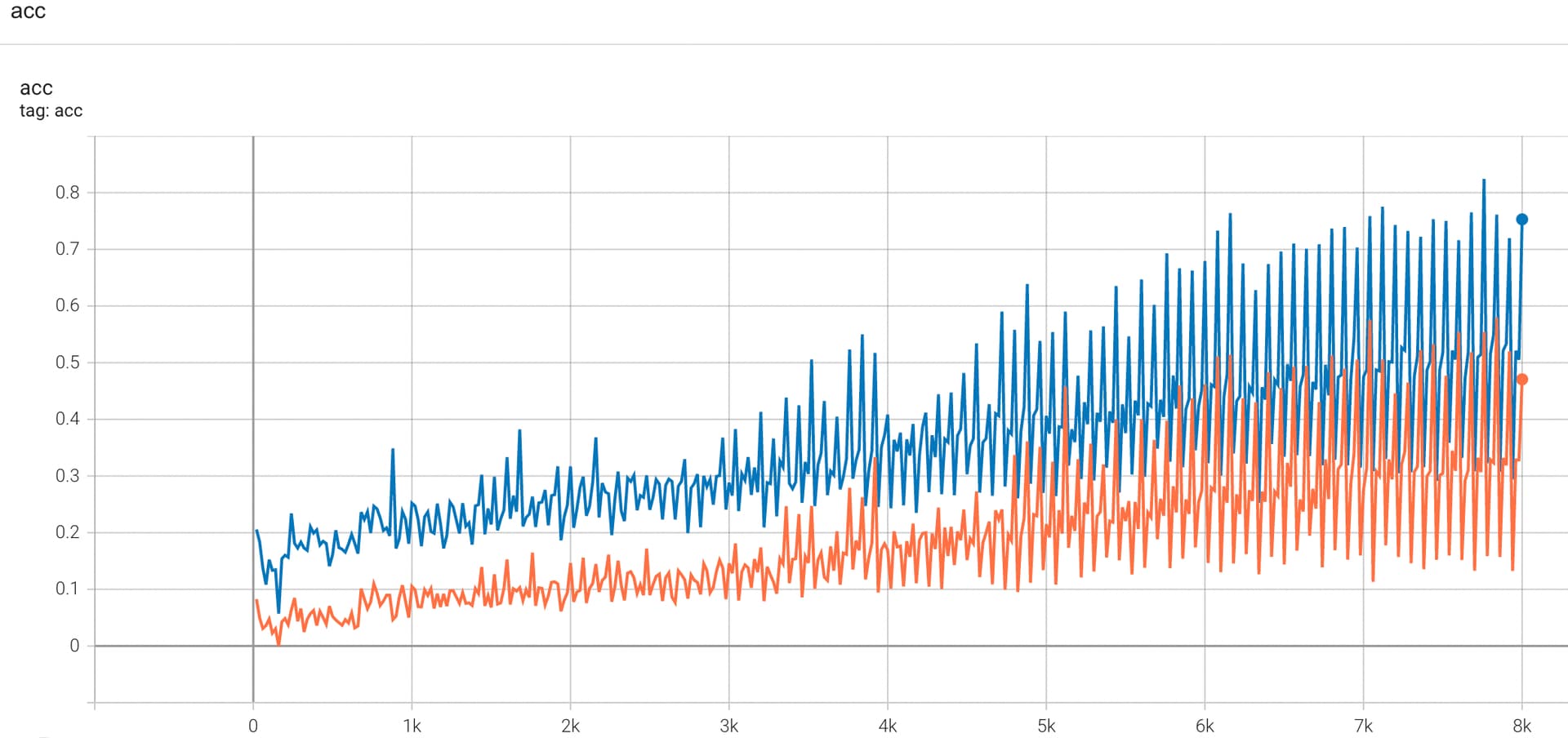

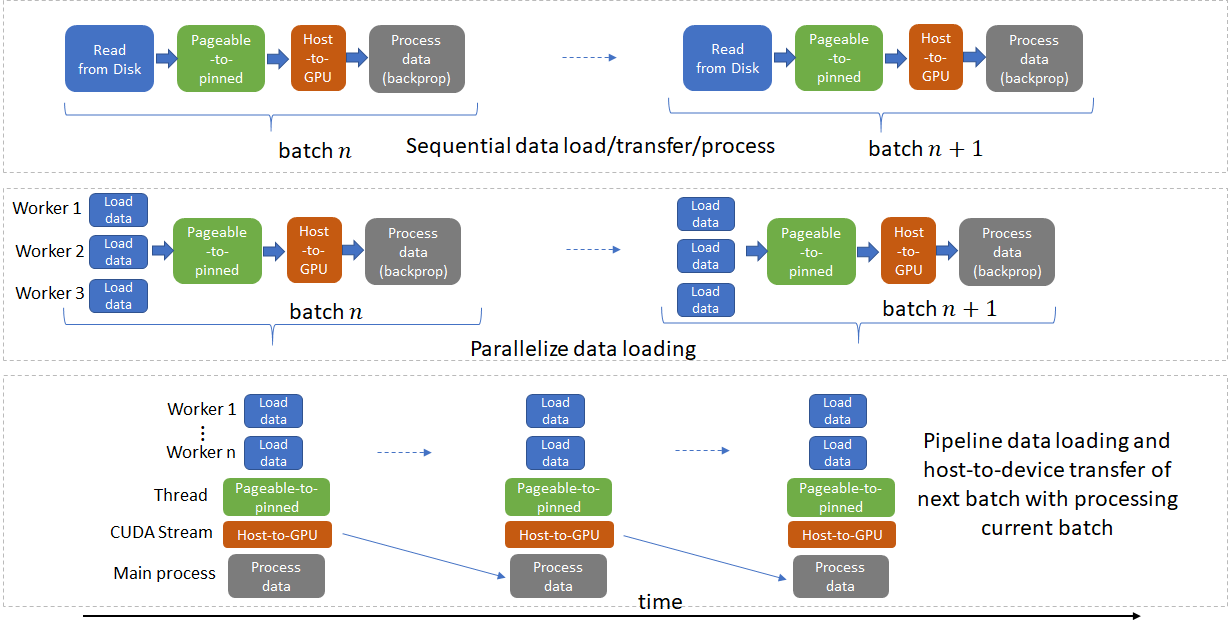

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer